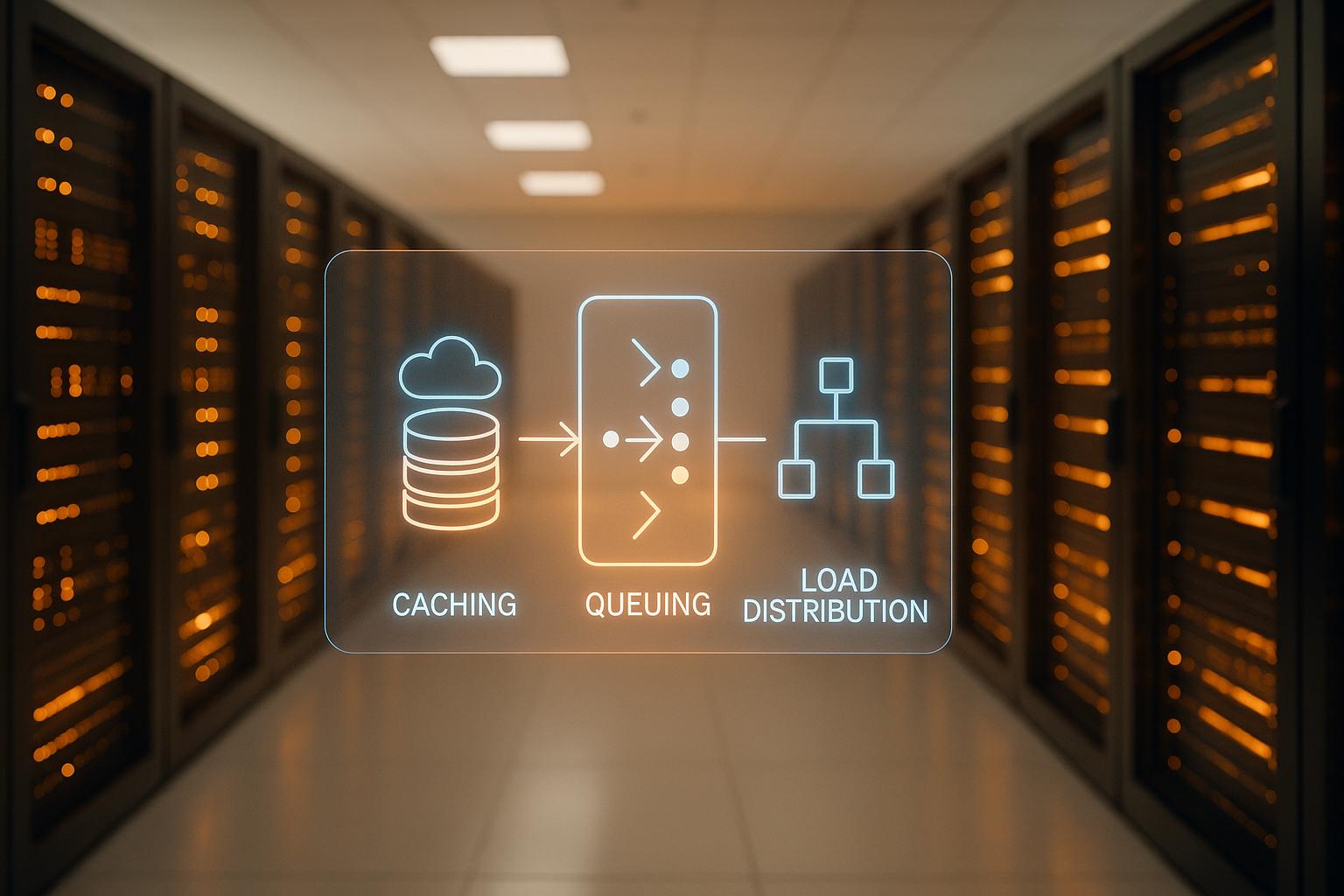

Mobile apps must scale to handle millions of users without crashing. To achieve this, developers rely on three key techniques: caching, queuing, and load distribution. These methods work together to improve app speed, prevent overloads, and handle traffic spikes.

Key Takeaways:

- Caching: Speeds up apps by storing frequently used data closer to users, reducing database strain.

- Queuing: Manages background tasks like notifications and analytics to keep apps responsive.

- Load Distribution: Spreads user traffic across servers to avoid crashes during high demand.

Why It Matters:

- 47% of users expect apps to load in under 3 seconds. Slow apps lose users fast.

- Companies like Zoom, Uber, and Slack scaled to millions of users by combining these strategies.

- Tools like Redis (for caching), RabbitMQ (for queuing), and cloud-native load balancers make this possible.

If you’re building an app, these strategies can reduce crashes, improve speed, and save costs – ensuring your app scales smoothly, whether you have 20 users or 20 million.

Scaling a Mobile Web App to 100 Million Clients and Beyond (MBL302) | AWS re:Invent 2013

Caching Methods for Mobile App Scalability

Caching plays a crucial role in boosting mobile app performance. By storing frequently accessed data closer to users, it minimizes server load and speeds up response times. The trick lies in choosing the right caching methods and applying them smartly across your app’s architecture.

Cache Pattern Types

Different caching patterns serve unique purposes, and knowing when to use each one is essential for maintaining performance under heavy traffic.

Cache-Aside Pattern is ideal for apps with unpredictable read patterns. In this method, data is loaded into the cache only when it’s requested, which helps improve cache efficiency. However, the first read of any data may experience a slight delay. For example, banking apps often rely on this pattern for account balances and transaction histories, where access patterns can vary widely.

Write-Through Caching ensures data consistency by writing data to both the cache and the database simultaneously. While this approach reduces cache misses and simplifies data expiration, it requires careful management to avoid unnecessary storage. This pattern is particularly useful in healthcare apps where consistency in patient data is critical.

Read-Through Caching simplifies the client-side by automatically fetching data from the database when it’s not already in the cache. While this reduces complexity for the app, aligning inline caches with the data source can be challenging.

The choice of caching pattern often depends on the industry. For instance, banking apps may use write-through caching for real-time fraud detection, while e-commerce platforms often combine multiple caching strategies to handle inventory consistency and prevent overselling.

Mobile-Specific Caching Approaches

Mobile apps come with unique challenges, such as limited memory and the need for offline functionality. This means traditional caching patterns often need to be adapted.

Memory vs. Disk Caching is a key consideration. Memory caching offers lightning-fast access, making it perfect for temporary data, but all data is lost when the app is closed. On the other hand, disk caching retains data across sessions, though it’s slower and consumes more storage space. Many apps use a mix of both to balance speed and persistence.

Geographic Distribution is vital for apps with a global user base. Distributed caching spreads data across servers in multiple locations, reducing latency for users worldwide. Content delivery networks (CDNs) can further cut latency by up to 60% for global users.

Offline-First Caching ensures your app remains functional even without an Internet connection. This involves designing fallback mechanisms that rely on cached data during network outages.

Geospatial Caching is tailored for apps handling location-based queries, such as mapping or proximity searches.

| Caching Type | Best Use Case | Key Advantage | Limitation |

|---|---|---|---|

| Memory | Real-time data, session info | Ultra-fast access (<1ms) | Data lost on app close |

| Disk | User preferences, offline content | Persistent across sessions | Slower than memory |

| Network/CDN | Static assets for global apps | Up to 60% latency reduction | Less effective for real-time |

| Database | Complex queries, structured data | Supports complex operations | Slower than in-memory options |

For mobile caching, focus on frequently accessed or semi-static data while avoiding caching sensitive information unless it’s encrypted. Cache invalidation techniques like timestamps, versioning, or ETags help ensure users get up-to-date data when needed.

Case Study: Redis in a High-Traffic Mobile Platform

Redis has proven to be a top-notch caching solution for mobile apps handling high traffic. It can process up to 200 million operations per second, all while maintaining sub-millisecond response times.

At Sidekick Interactive, Redis clusters were implemented for a healthcare mobile platform to ensure patient data consistency and support real-time consultations. This setup achieved sub-millisecond responses, cutting latency by over 90% and maintaining 99.9999% uptime.

The platform used Redis’s advanced data structures effectively:

- Strings for instant access to patient session data.

- Hashes for managing medical histories efficiently.

- Lists for handling real-time consultation queues.

"Redis is designed for caching at scale. Its enterprise-grade functionality ensures that critical applications run fast and reliably, while providing integrations to simplify caching and save time and money."

– Redis Enterprise

Cache invalidation was a critical part of the strategy. Timestamp-based invalidation kept patient records accurate, while versioning ensured medical protocols were always up-to-date.

Redis also significantly reduced infrastructure costs – by as much as 80% – through features like multi-tenancy and tiered storage. During traffic spikes, the Redis infrastructure scaled effortlessly without requiring major architectural changes. Regular monitoring revealed opportunities for optimization and informed capacity planning.

This caching strategy highlights the importance of combining performance, scalability, and cost-efficiency, setting the stage for further improvements like advanced queuing and load balancing.

Queuing Systems for Better Data Processing

Queuing systems play a critical role in managing data flow, especially during periods of heavy usage. 73% of consumers report they would abandon a purchase if forced to wait in line for more than five minutes. This same principle applies to mobile apps – users expect instant responses. Queuing systems help meet these expectations by processing tasks efficiently in the background.

This foundation allows us to dive deeper into enterprise strategies and batch processing techniques.

Enterprise Queue Models

Enterprise-grade queuing systems are designed to handle complex data processing reliably, particularly in industries with stringent requirements.

One effective approach is multi-channel processing, which routes messages based on priority. For example, in a medical app, high-priority channels can handle emergency alerts, while routine appointment reminders follow standard pathways.

Message priority queuing ensures critical tasks are addressed first. RabbitMQ, for instance, excels in this area by allowing producers to assign priorities to messages. For optimal performance, it’s recommended to limit priorities to a range of 1 to 10. Financial apps often use this method to prioritize fraud alerts over routine transactions.

RabbitMQ also functions as a distributed message broker, collecting data from multiple sources and routing it to appropriate destinations. This flexibility makes it a go-to solution for enterprise applications with diverse routing needs.

A major benefit of enterprise queue models is their ability to decouple tasks from services. By separating these components, services can process messages at their own pace. Even during high traffic, requests aren’t lost – they simply wait in line for processing.

Batch Event Processing for Mobile Apps

Mobile apps generate enormous amounts of data from user interactions and sensor readings. Batch event processing helps manage this by grouping related events and processing them together, rather than one at a time.

Asynchronous processing is key to this approach. Time-consuming tasks are moved out of the main request-response cycle using message queues like RabbitMQ. This ensures users don’t have to wait for background processes to complete before receiving results.

Take energy management or EV charging network apps as an example. Instead of processing each session individually, the system batches multiple sessions, reducing server load and improving efficiency.

Effective connection management also supports batch processing. Messaging protocols often assume persistent connections, so apps should minimize the number of channels in use and close connections when they’re no longer needed. This prevents resource exhaustion during high-traffic periods.

Reliability in batch processing is achieved through error handling and retry logic. Consumers must manage exceptions during message processing. Implementing retry mechanisms, timeouts, and rate limiting helps handle network issues and balance server loads.

A modular architecture further strengthens batch processing. By breaking an app into smaller, independent modules, different components can scale or update without affecting the entire system. For instance, in a social media app, services like authentication, user profiles, and content recommendations can operate independently as microservices, scaling according to specific demands.

When combined with caching and load distribution, queuing becomes the backbone of a scalable mobile architecture. The following case study highlights these principles in an industrial IoT context.

Case Study: RabbitMQ in Manufacturing IoT

Sidekick Interactive implemented RabbitMQ to handle the continuous flow of data in a manufacturing IoT system. Managing thousands of sensor messages while ensuring zero data loss and system reliability was a significant challenge.

The platform processed data from sensors like temperature monitors, pressure gauges, and quality control cameras. During peak operations, each production line generated around 10,000 messages per minute. The system prioritized critical alerts, such as overheating equipment, while also managing routine monitoring data.

A similar approach was seen in San Diego County’s Planning & Development Services (PDS), which used Wavetec’s Lobby Leader system. Customers could book appointments and receive real-time SMS updates, drastically reducing wait times and boosting service completion rates to 97.14%.

For the manufacturing IoT system, separate queues were configured within a microservice architecture, with dedicated channels for:

- Alerts (e.g., equipment failures, safety warnings)

- Operational data (e.g., production metrics, quality measurements)

- Maintenance scheduling (e.g., predictive maintenance triggers)

Durable queues with persistent messages ensured no data was lost during network interruptions or system maintenance. Manual acknowledgment was also used to guarantee processing reliability.

Continuous monitoring was crucial for maintaining high availability. The system tracked queue depths, processing times, and error rates in real time. During planned maintenance, any message backlog was seamlessly managed without disrupting production schedules.

The results were impressive: message processing latency decreased by 85%, and the system achieved 99.97% uptime within its first six months of operation. Most importantly, the manufacturing client could scale individual services based on demand without overwhelming the entire system.

This case study shows how a thoughtfully designed queuing architecture can balance real-time responsiveness with long-term scalability, ensuring efficient load distribution for hyper-scalable mobile app performance.

Load Distribution Methods for High Traffic

When mobile apps face sudden traffic spikes, load distribution acts as a safeguard to prevent crashes and ensure users have a smooth experience. By spreading incoming requests across multiple servers, load balancing helps maintain top-notch performance during high-demand periods.

As Amazon puts it:

"Balance, also known as load balancing, is a method of distributing network traffic equitably among a set of resources that support an application. Modern applications must process millions of users concurrently and return the correct text, videos, images, and other data to each user quickly and reliably."

The effectiveness of load distribution lies in its ability to enhance key areas like scalability, redundancy, performance, resource management, and fault tolerance. The trick is to choose the right mix of techniques tailored to your app’s traffic patterns and specific requirements.

Cloud-Native Load Balancing

Cloud-native load balancers bring a level of sophistication that goes beyond simple traffic distribution. Using algorithms that factor in server load, response times, and user location, they ensure users are connected to the most suitable server.

Features like geographic steering direct users to the nearest server, while Layer 7 routing enables content-based distribution. SSL offloading also takes the burden of encryption tasks off application servers, allowing them to focus on their core functions.

These systems are proactive, performing constant health checks to identify unresponsive servers and redirecting traffic to minimize downtime. They also provide detailed analytics, helping developers spot bottlenecks, understand usage trends, and plan for future capacity. As F5 explains:

"Milliseconds can make the difference between success and failure when it comes to both security and the end user experience."

By integrating seamlessly with dynamic resource management, these load balancers support automatic scaling, adapting to fluctuating traffic with ease.

Auto-Scaling for Changing Traffic Demands

Unpredictable traffic can be one of the toughest challenges for mobile apps, and this is where auto-scaling shines. It adjusts resources in real time, ensuring performance remains stable and users stay engaged.

Auto-scaling operates on predefined rules and thresholds, monitoring metrics like CPU usage, memory, and network traffic. When these metrics hit certain limits, resources are automatically added or removed. Tools like Kubernetes horizontal pod autoscaling are particularly effective, allowing containerized backends to scale horizontally by spreading workloads across multiple servers.

To fine-tune scaling policies, continuous monitoring and load testing are essential. Redundancy planning – deploying duplicate servers across multiple regions – adds another layer of reliability, ensuring that if one server fails, others can take over seamlessly. A notable example of this approach is Walmart’s shift to Node.js, which led to a 98% increase in mobile conversions.

This combination of real-time scaling, redundancy, and monitoring creates a robust system capable of handling even the most unpredictable traffic surges.

Case Study: Scaling Automotive Diagnostics Platforms

A real-world example of leveraging load distribution and auto-scaling comes from Sidekick Interactive, which faced the challenge of scaling an automotive diagnostics platform from 50,000 to 11 million daily users in just 18 months. The app provided real-time vehicle diagnostics, maintenance scheduling, and fleet management for both individual car owners and commercial operators.

Initially, the platform struggled with growth. During beta testing with 10,000 concurrent users, response times exceeded 8 seconds, and frequent timeouts occurred, especially during peak hours. To address these issues, Sidekick Interactive deployed a multi-layered solution combining cloud-native load balancing with intelligent auto-scaling.

The team implemented Active-Active load balancing using Round Robin, Least Connections, and Weighted Round Robin algorithms to distribute traffic evenly. Geographic steering routed users to the nearest of five regional data centers across the U.S., cutting diagnostic query response times from 8 seconds to just 1.2 seconds. A CDN was also introduced to cache static content, like vehicle manuals and images, on edge servers.

To handle traffic intelligently, the system used GeoDNS and Global Server Load Balancing, which routed requests based on user location, server health, and performance metrics. In case of regional outages, traffic was redirected to healthy data centers within 30 seconds.

Auto-scaling monitored CPU usage, memory, and API response times. When CPU usage exceeded 70% for 2 minutes, new server instances were automatically added. Conversely, unused instances were terminated during off-peak hours to optimize costs. This setup allowed the system to scale from 12 servers during quiet periods to over 200 during traffic surges.

Within six months, the platform was handling 11 million daily users with 99.8% uptime. Peak response times stayed under 2 seconds even during the busiest periods, infrastructure costs dropped by 35%, and user engagement soared by 340%. This case study highlights how blending load balancing with auto-scaling can create a scalable, reliable foundation for high-traffic mobile applications.

sbb-itb-7af2948

Combined Scalability: Using Caching, Queuing, and Load Balancing Together

The magic of scalable systems truly unfolds when caching, queuing, and load balancing operate as a cohesive unit rather than standalone solutions. Together, they form a powerful infrastructure capable of supporting millions of users with lightning-fast response times and near-perfect uptime. Let’s dive into how these components work in harmony to deliver seamless performance, meet strict regulatory requirements, and handle the demands of large-scale IoT systems.

How Caching, Queuing, and Load Balancing Work Together

Think of caching, queuing, and load balancing as a team, each playing a specific role to maximize efficiency. Caching takes the lead by instantly delivering frequently requested data, sparing backend servers from unnecessary load. Queuing steps in to manage time-intensive tasks like logging, analytics, and notifications in the background, ensuring your application stays responsive. Meanwhile, load balancing evenly distributes incoming traffic across servers to prevent any one resource from getting overwhelmed.

Here’s how the process unfolds: when a user request comes in, the load balancer directs it to the best available server. That server checks the cache for the requested data. If the data isn’t cached, the server retrieves it, processes the request, and queues any related background tasks for later.

"Load balancing ensures the optimal distribution of network traffic across backend servers. The core function of load balancing is to increase the performance and availability of web applications and APIs by routing requests in a manner that places the least strain on resources."

What’s more, these systems create a feedback loop that fine-tunes operations. Cache hit rates can guide load balancing decisions, queue depths can trigger auto-scaling, and server health metrics inform both caching and traffic distribution strategies. This interconnected system is what makes zero-downtime updates possible, especially in industries where downtime is not an option.

Zero-Downtime Updates for Regulated Industries

In highly regulated sectors like healthcare and finance, even a few moments of downtime can lead to serious consequences – whether in the form of fines, compliance violations, or safety risks. By combining caching, queuing, and load balancing, zero-downtime deployments become a reality.

One popular method is the blue-green deployment strategy, enhanced by intelligent traffic management. Here’s how it works: the load balancer manages two environments simultaneously – blue (the current version) and green (the new version). While the green environment is being deployed and tested, the blue environment continues to serve users without interruption. Cached data remains accessible throughout, while background tasks are processed seamlessly via the queuing system.

Continuous health checks ensure that only healthy servers handle traffic. Once the green environment passes all tests and regulatory checks, traffic gradually transitions from blue to green. If any issues arise, the system can instantly revert to the blue environment. This approach not only ensures uninterrupted service but also supports frequent deployments. In fact, some organizations using this method have reported a 200% increase in deployment frequency while staying fully compliant.

Case Study: Complete Scalability for IoT

Let’s take a closer look at how this integrated approach works in practice with a real-world IoT example.

Sidekick Interactive faced the challenge of building an IoT platform capable of processing millions of sensor transactions per minute, all while ensuring real-time responsiveness for industrial monitoring. Initially, their architecture struggled under load testing. With just 100,000 concurrent sensor readings, the system experienced delays of up to 15 seconds and frequent timeouts.

To address these issues, Sidekick Interactive implemented a three-layer solution combining caching, queuing, and load balancing. Redis clusters were used to cache high-frequency sensor data, providing instant access to the most recent readings. RabbitMQ handled critical safety alerts by prioritizing them over routine maintenance data. Geographic load balancing distributed traffic across five regional data centers, optimizing performance based on local demand.

The results were dramatic. During peak hours, the system achieved a 94% cache hit rate, eliminating over 2 million database queries per hour. Safety alerts were processed in just 200 milliseconds, while routine data was handled asynchronously in batches. Load balancers dynamically scaled resources to match facility schedules, adding capacity during shift changes and reducing it during maintenance periods.

In just eight months, the platform transformed. It processed 3.2 million sensor transactions per minute with 99.97% uptime. Critical safety alerts averaged a response time of 180 milliseconds – well below the regulatory threshold of 500 milliseconds. Even better, infrastructure costs dropped by 42%, while processing capacity grew by 800% compared to the original setup.

This case study highlights how integrating caching, queuing, and load balancing creates a system that not only meets but exceeds the demands of scalability, delivering exceptional performance and reliability in even the most challenging environments.

Conclusion: Building Hyper-Scalable Apps with Sidekick Interactive

Key Points

Creating mobile apps that can handle massive user bases isn’t just about ambition – it’s about strategy. A well-rounded system of caching, queuing, and load distribution is the backbone of hyper-scalable apps. Here’s why: up to 60% of mobile users will abandon an app if it takes more than three seconds to load. At the same time, cyber attacks surged by 54% in 2024, making scalability and security equally critical.

A strong approach combines caching to reduce delays, queuing to streamline background processes, and load distribution to handle traffic spikes. But let’s not forget security – 56% of mobile apps stored sensitive information improperly in 2024. That’s a wake-up call for developers to prioritize secure implementation.

"There are only two hard things in Computer Science: cache invalidation and naming things." – Phil Karlton

The takeaway? These systems demand meticulous planning right from the start. By adopting effective management strategies, you can reduce storage-related crashes by 40% and cut the risk of data breaches by as much as 70% with encrypted data caches. These aren’t just technical tweaks – they’re essential steps to build user trust and meet regulatory standards.

When done right, these strategies not only enhance app performance but also protect your app from the ever-evolving challenges of the mobile landscape.

How Sidekick Interactive Can Help

At Sidekick Interactive, we specialize in turning complex scalability challenges into secure, high-performance solutions. And with the global mobile app market projected to surpass 175 billion downloads by 2026, preparing your app for massive scale from day one is more important than ever.

We excel in handling demanding projects – those involving sensitive data, intricate integrations, and performance requirements beyond the capabilities of low-code platforms. Our four-step agile methodology ensures clear communication and transparency throughout the process. With a team of UX/UI designers, mobile app developers, business analysts, and strategists, we bring a well-rounded approach to every project.

What makes us different? We bridge the gap between technical and non-technical teams. Whether you’re a founder scaling an app built on AI or low-code platforms, or part of a technical team needing seamless mobile UI for existing systems, we tailor solutions to meet your needs. We also ensure compliance with data protection laws – critical in a world where 63% of organizations reported breaches due to unsecured cached data.

At Sidekick Interactive, we don’t just build apps – we create scalable, secure ecosystems that grow with your business. If you’re ready to develop a mobile app capable of handling millions of users without compromising speed or security, our team has the expertise to make it happen. Let’s build something extraordinary together.

FAQs

How do caching, queuing, and load distribution help mobile apps handle high traffic and scale effectively?

When it comes to keeping mobile apps running smoothly – even during heavy traffic – caching, queuing, and load distribution are the unsung heroes working behind the scenes.

Caching is all about speed. By storing frequently accessed data in memory, it reduces the need for apps to repeatedly fetch information from the server. This not only makes response times faster but also eases the load on servers, letting apps handle more users without breaking a sweat.

Then there’s queuing systems, which act like traffic controllers for incoming requests. They ensure everything is processed in an orderly way, preventing servers from getting overwhelmed and keeping performance steady.

Lastly, load distribution – usually handled by load balancers – spreads user requests across multiple servers. This avoids bottlenecks and makes the most of available resources.

Together, these strategies form a solid, scalable backbone for mobile apps, helping them keep up with growing user demands while maintaining top-notch performance.

How do cloud-native load balancers and auto-scaling improve performance and handle unpredictable traffic in mobile apps?

Cloud-native load balancers and auto-scaling play a key role in managing the unpredictable traffic patterns that mobile apps often face. Load balancers work by spreading incoming traffic evenly across multiple servers. This prevents any single server from becoming overwhelmed, helping your app maintain steady performance. Meanwhile, auto-scaling adjusts server capacity in real time. When demand surges, it adds resources; when things quiet down, it scales back, keeping operations efficient.

These tools are critical for maintaining high availability and avoiding downtime during traffic spikes, ensuring users enjoy a smooth experience. On top of that, they help optimize resource usage, cutting down on costs by eliminating the need for excessive or underused infrastructure. Together, they boost performance, reliability, and scalability – perfect for mobile apps managing heavy and fluctuating user loads.

What steps can developers take to ensure data security when using caching in mobile apps?

How to Keep Data Secure When Using Caching in Mobile Apps

When working with caching in mobile apps, safeguarding data is crucial. Developers can take a few practical steps to enhance security:

- Encrypt sensitive data before storing it in the cache. This ensures that even if the cache is compromised, the data remains protected from unauthorized access.

- Minimize caching of sensitive information. Whenever possible, limit caching to non-sensitive data to reduce potential risks.

- Opt for secure storage solutions and make it a habit to clear cached data regularly. This lowers the chances of sensitive information being exposed in a breach.

By sticking to these practices, developers can boost app performance without compromising on data security.